First, they came for our jobs. Now, they're coming for our APIs. Actually, that's a good thing, because some of the API work that LLMs can do, even on the simplest endpoint, is really exceptional. In fact, it might be the best thing that's happened to API development since REST. I want to walk you through a feature where we discovered that an LLM can make a better API backend than a traditional third-party API.

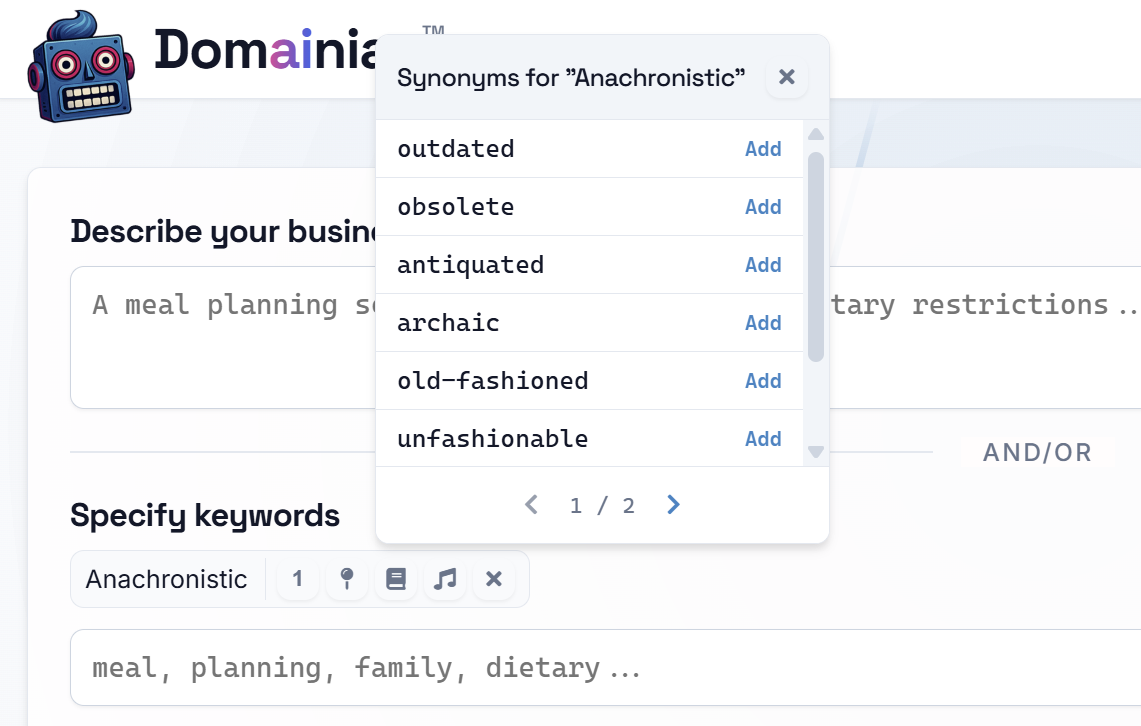

While building Domainiac, the AI-powered domain name search tool, we needed a simple feature: a thesaurus. When users search for a domain, we wanted to give them synonyms to help them brainstorm. "creative.com" might be taken, but "imaginative.com" could be available. Simple enough, and here it is in action:

The API Search That Led Us Astray

Naturally, my first instinct was to find a thesaurus API. I spent a day evaluating services. The experience was exactly as frustrating as you'd expect. I found APIs that were slow, expensive, had restrictive rate limits, or returned inconsistent junk. Some required complex integrations or separate API keys. It was the usual roulette wheel of third-party dependencies: a mess of square pegs for a simple round hole.

After burning a frustrating day on this, we had a thought: what if we just asked an LLM to do it?

The result was so good it changed how I think about building certain API-backed features.

The LLM Is a Better API

We pointed our request at Google's Gemini 2.0 Flash model, and it blew every traditional API out of the water. The synonyms were more relevant, the response time was faster, and the quality was consistently better than most of the paid services we tested.

And here's the kicker: the model is free. Google offers a generous free tier that completely covers our use case at zero cost.

The feature is now a simple Cloud Function that returns structured JSON, just like any other API. But behind that clean interface, an LLM generates the data in real-time. There's no static thesaurus database and no third-party dependencies. Just a well-engineered prompt.

How We Built It

The implementation is surprisingly straightforward.

The Frontend Call

From the user's perspective, it's just a standard API call. Nothing clever here.

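import { httpsCallable } from "firebase/functions";

// functionsInstance is the app's existing Firebase Functions handle.
const getThesaurusFunction = httpsCallable(functionsInstance, 'getThesaurus');

const result = await getThesaurusFunction({
  keyword: "creative",
  lookupType: "synonyms"
});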

The Backend

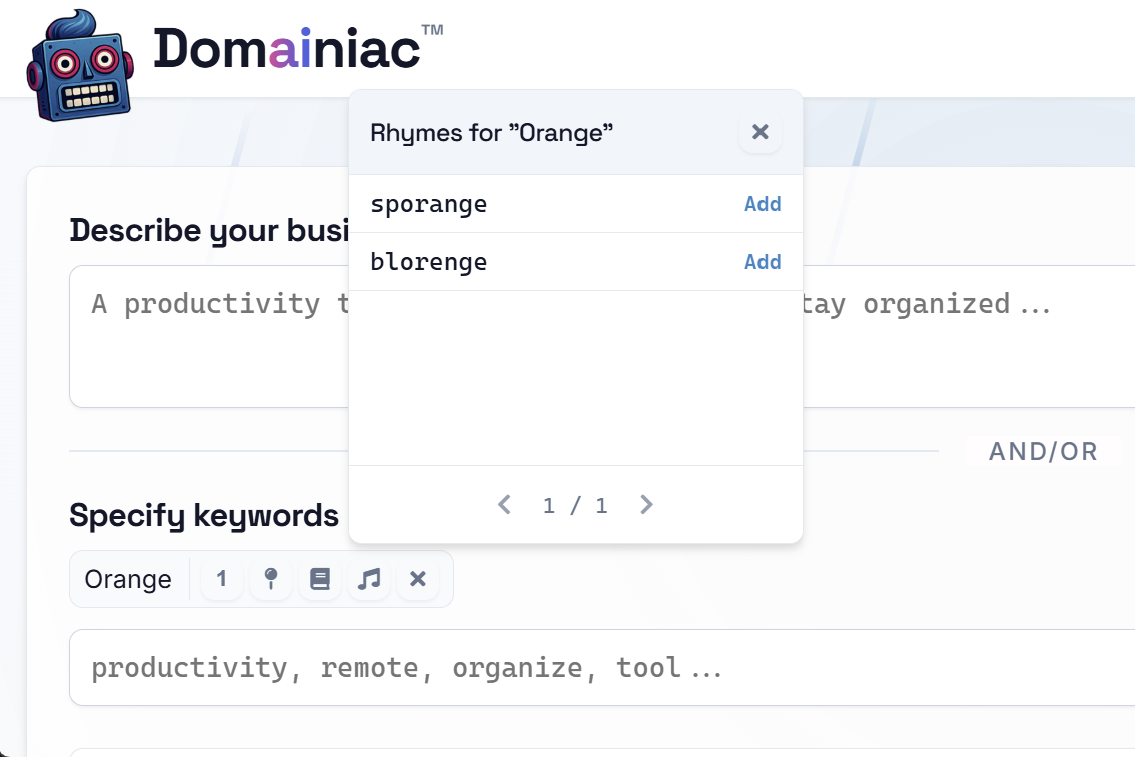

The backend just constructs a prompt and fires it at Gemini. When we wanted to add rhymes, we didn't need a new integration or data source. We just added a button and changed the prompt. That's it. I can't imagine the effort required to do this from first principles without an LLM. This is deliciously simple. We're not asking for an emoji-filled essay from the LLM; we're asking it for data in a specific JSON format, and the LLM delivers.

I thought nothing rhymed with "Orange"?

Sporange is a botanical term for a structure that produces spores.

Blorenge is a prominent hill overlooking the valley of the River Usk near Abergavenny, Monmouthshire, southeast Wales.

Thanks Domainiac.

Making It Production-Ready

Of course, using an LLM as an API isn't as simple as just wiring up a prompt. A few key decisions made this reliable enough for production:

Validate Everything (Seriously)

LLMs can be unpredictable. We validate every response using a Zod schema to ensure it matches the structure we expect.

import { z } from "zod";

// Expected shape: { words: [{ word, category }, ...] }
const thesaurusOutputSchema = z.object({
  words: z.array(z.object({
    word: z.string(),
    category: z.string()
  }))
});

This saved us headaches. Annoyingly, we found that Gemini would occasionally inject comments into the JSON. Having a strict schema and a cleanup step to strip non-JSON content was critical.
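To make the cleanup step concrete, here's a minimal sketch of what stripping non-JSON content can look like before validation. It is not our production code: the names `stripJsonComments`, `isThesaurusOutput` and `parseThesaurusResponse` are hypothetical, and a hand-written shape check stands in for the Zod schema so the snippet runs with no dependencies. It assumes the model output contains a single JSON object, possibly wrapped in a code fence and sprinkled with `//` or `/* */` comments.

```typescript
// Illustrative sketch only: helper names are hypothetical, and the hand-written
// shape check stands in for the Zod schema so the snippet has no dependencies.

type ThesaurusOutput = { words: { word: string; category: string }[] };

// Drop // and /* */ comments, but leave anything inside a JSON string alone.
function stripJsonComments(text: string): string {
  let out = "";
  let inString = false;
  let i = 0;
  while (i < text.length) {
    const ch = text[i];
    const next = text[i + 1];
    if (inString) {
      out += ch;
      if (ch === "\\" && i + 1 < text.length) {
        out += text[i + 1]; // copy the escaped character verbatim
        i += 2;
        continue;
      }
      if (ch === '"') inString = false;
      i += 1;
    } else if (ch === '"') {
      inString = true;
      out += ch;
      i += 1;
    } else if (ch === "/" && next === "/") {
      while (i < text.length && text[i] !== "\n") i += 1; // skip to end of line
    } else if (ch === "/" && next === "*") {
      const end = text.indexOf("*/", i + 2);
      i = end === -1 ? text.length : end + 2; // skip past the closing */
    } else {
      out += ch;
      i += 1;
    }
  }
  return out;
}

// Same shape as the schema: { words: [{ word: string, category: string }] }.
function isThesaurusOutput(value: unknown): value is ThesaurusOutput {
  if (typeof value !== "object" || value === null) return false;
  const words = (value as Record<string, unknown>).words;
  return Array.isArray(words) && words.every((w: unknown) =>
    typeof w === "object" && w !== null &&
    typeof (w as Record<string, unknown>).word === "string" &&
    typeof (w as Record<string, unknown>).category === "string");
}

function parseThesaurusResponse(raw: string): ThesaurusOutput {
  const start = raw.indexOf("{"); // ignore code fences or chatter before the object
  if (start === -1) throw new Error("No JSON object in model output");
  const cleaned = stripJsonComments(raw.slice(start));
  const end = cleaned.lastIndexOf("}"); // ignore anything after the object
  if (end === -1) throw new Error("Unterminated JSON object in model output");
  const parsed: unknown = JSON.parse(cleaned.slice(0, end + 1));
  if (!isThesaurusOutput(parsed)) throw new Error("Model output did not match the expected shape");
  return parsed;
}
```

Anything that fails to parse or doesn't match the shape throws, so the caller can retry or return an error instead of passing junk to the frontend. With Zod in place, the shape check would simply be a call to the schema's `parse` method.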

Cache Aggressively

Even free APIs have limits. We implemented a simple in-memory cache with a 5-minute TTL. The first request for "creative" hits Gemini; subsequent requests within five minutes get an instant response from the cache. This sped up repeated clicks and handled weird edge cases.
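For illustration, a cache like this fits in a few lines. The sketch below is an assumption about the general shape, not our exact code: `TtlCache`, `getOrLoad` and `cacheKey` are hypothetical names, and the key format (lookup type plus normalized keyword) is a guess at a sensible default.

```typescript
// Illustrative sketch: class and function names are hypothetical.

class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  // `now` is injectable so expiry can be tested without waiting five minutes.
  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (entry === undefined) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // expired: evict lazily on read
      return undefined;
    }
    return entry.value;
  }

  set(key: string, value: V): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  // Return the cached value, or call `load` (e.g. the Gemini request) and cache its result.
  async getOrLoad(key: string, load: () => Promise<V>): Promise<V> {
    const cached = this.get(key);
    if (cached !== undefined) return cached;
    const value = await load();
    this.set(key, value);
    return value;
  }
}

// Assumed key format: lookup type plus the trimmed, lower-cased keyword.
function cacheKey(lookupType: string, keyword: string): string {
  return `${lookupType}:${keyword.trim().toLowerCase()}`;
}
```

A handler would create one `new TtlCache(5 * 60 * 1000)` at module scope and wrap the model call in `getOrLoad(cacheKey(lookupType, keyword), ...)`. Two caveats with this sketch: concurrent requests for the same uncached key will each call the loader, and an in-memory cache lives only as long as the function instance that holds it.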

Tune for the Task and Filter the Noise

We use temperature: 1.0 for the thesaurus, a fairly high setting that favors variety, to get a wide range of words. For our core domain generation, we dial it back for more predictable results.

We also do some simple post-processing to ensure data quality, like filtering out multi-word results that don't work for domain names.

// Keep only non-empty, single-word results; domain names can't contain whitespace.
const allWords = result.words.filter(item => {
  const word = item.word.trim();
  return word.length > 0 && !/\s/.test(word);
});

The Prompt

Here's the core of the implementation. I'm simplifying slightly, but this is basically it:

const prompt = isSynonym
  ? `Generate as many single word synonyms as possible for "${normalizedKeyword}".
Order by most similar first.
OUTPUT FORMAT: {"words": [{"word": "example", "category": "synonym"}]}`
  : `Generate as many single words as possible that rhyme with "${normalizedKeyword}".
Order by best rhymes first.
OUTPUT FORMAT: {"words": [{"word": "example", "category": "rhyme"}]}`;

const response = await ai.generate({
  model: gemini20Flash,
  prompt,
  config: {
    temperature: 1.0,
    maxOutputTokens: 1024,
  },
});

That's it. Two slightly different strings, one model, one endpoint. This is the power of treating LLMs as API backends: new functionality doesn't require new infrastructure; it requires better prompts.
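If the list of lookup types keeps growing, one way to keep "new feature equals new prompt" literal is a small table of prompt specs. This is a sketch of that idea rather than what we shipped: `LookupType`, `PROMPT_SPECS` and `buildPrompt` are hypothetical names, and the trim-and-lowercase normalization is an assumption.

```typescript
// Illustrative sketch: LookupType, PROMPT_SPECS and buildPrompt are hypothetical names.

type LookupType = "synonyms" | "rhymes";

interface PromptSpec {
  instruction: (keyword: string) => string;
  ordering: string;
  category: string; // value the model is asked to put in each item's "category" field
}

// Adding a lookup type means adding one entry here (and one member to LookupType).
const PROMPT_SPECS: Record<LookupType, PromptSpec> = {
  synonyms: {
    instruction: (k) => `Generate as many single word synonyms as possible for "${k}".`,
    ordering: "Order by most similar first.",
    category: "synonym",
  },
  rhymes: {
    instruction: (k) => `Generate as many single words as possible that rhyme with "${k}".`,
    ordering: "Order by best rhymes first.",
    category: "rhyme",
  },
};

function buildPrompt(lookupType: LookupType, keyword: string): string {
  const spec = PROMPT_SPECS[lookupType];
  const normalizedKeyword = keyword.trim().toLowerCase(); // assumed normalization
  // Show the model one example item in exactly the shape the validator expects.
  const example = JSON.stringify({ words: [{ word: "example", category: spec.category }] });
  return [spec.instruction(normalizedKeyword), spec.ordering, `OUTPUT FORMAT: ${example}`].join("\n");
}
```

The model call, validation, cache and filtering stay exactly the same; only the table changes. Because `PROMPT_SPECS` is typed as a `Record` over `LookupType`, the compiler complains if a new lookup type is declared without a matching prompt.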

The Bigger Picture: APIs Are Becoming Conversations

This pattern isn't limited to our trivial thesaurus. It unlocks a fundamental shift in how we build software. The rigid, endpoint-driven API is making way for something far more dynamic: the "intelligent endpoint." Instead of calling a microservice that does one thing, you'll call an LLM that can understand anything.

Think about the implications. Today, my thesaurus function returns synonyms. Tomorrow, with a one-line prompt change, it could return translations, generate marketing copy, or even suggest Cockney rhyming slang if I wanted it to. The underlying infrastructure doesn't change, and coding it is FAST.

This isn't just about users clicking buttons. We are increasingly seeing autonomous AI agents that will orchestrate these intelligent endpoints constantly in the background. Your CRM won't just store data; an agent will be able to enrich a new user profile by asking an LLM a series of questions, replacing a dozen bespoke API integrations. Your support system won't just log tickets; an agent will be able to summarize a user's problem, query a knowledge base via natural language, and draft a solution, all before a human ever sees it. The integrated technology ecosystem that works will be one that knows how to communicate effectively with all parts of itself.

These aren't just one-off calls. This will be a constant, silent hum of AI agents talking to AI endpoints, chaining together complex tasks, all triggered by a single user action or system event.

The Takeaway for Tech Leaders

The traditional API landscape is changing. For a growing class of problems, the best solution isn't another third-party integration; it's a well-crafted prompt with a validation schema.

This completely upends the "build vs. buy" calculation. Why spend weeks building a service when a free, state-of-the-art model can do it with a ten-line prompt? For us, the little thesaurus feature was a clear win: better results, faster responses, zero cost, and less complexity. That's not just good architecture; that's a massive competitive advantage when rolled out at scale across an enterprise.

We used to spend our time building rigid APIs that passed structured data. The next phase of our job will be orchestrating conversations between AIs that can understand intent and generate structured data on the fly from disparate ecosystems. Finally, my umbrella will know that it's raining.

Check out Domainiac to see this in action. Add some keywords and click the thesaurus icon. You'll be using an LLM-backed API without even knowing it.